- Part 1 - Design

- Part 2 - Where to start

- Part 3 - "Smart" Appliances

- Part 4 - Ceiling Fans

- Part 5 - Bin Day

- Part 6 - Lighting

- Part 7 - Motorised Gate

- Part 8 - Motorised Blinds

- Part 9 - Door Locks

- Part 10 - Debugging!

- Part 11 - House sitter mode

- Part 12 - Backups

- Part 13 - Wall Mounted Dashboards

- Part 14 - Motion, Occupancy, and Presence

- Part 15 - Generative AI and Notifications (this post)

- Part 16 - Seasonal Automations

Like many people, I’ve been diving into Generative AI (GenAI) over the past 12 or so months and looking at how to use it in the sorts of solutions we can build. I’m also the kind of tinkerer who is always looking for weird and wonderful ways to use technology, so I’ve been looking at how to use GenAI in my smart home.

Before we get into that part of the story, here’s a little context on something I’ve been doing. For the better part of a year, I’ve had a daily notification for our kids that tells them what they have on at school that day: whether it’s their library day, whether they have sport or after school care, and so on. It runs at 8.30am, just before we leave the house, and, well, it’s become a bit of a “Pavlov’s bell” for them. When they hear the notification, they know what’s coming up that day and that it’s time to get ready to leave.

The thing is, the message comes from a template that looks at a bunch of helpers set up in Home Assistant, so it comes out the same way every day. It’s a bit boring because it’s very static, like “

Enter GenAI

After upgrading the hardware my Home Assistant instance runs on (from a Pi4 to a NUC), I have a bit more power to play with, so I’ve been running some Small Language Models (SLMs) on the NUC to see what I could do with them. The NUC is too underpowered to act as a local GenAI server backing the Assistants part of Home Assistant. I tried: Phi3-mini doesn’t have a large enough token limit for the system message, let alone a user message, and anything larger takes so long to respond that it’s completely impractical 🤣. But an SLM that only serves the occasional response should be fine.

Hello Ollama

The first thing we need to do is have a way in which we can run the SLM and get a response. I’m going to be using Ollama, as it’s a nifty little tool for working with models like this, and it can be run either as a standalone executable, or as a Docker container. Follow their guide on how to get it running on whatever host you have (I’m using Docker on my NUC).

Next, we’ll need to pick a model to use. Because I’m running this on a NUC, I don’t really have a GPU to play with, so I’ve gone with a pretty small model: Phi3, specifically its mini variant. It is only about 2 GB on disk and has 3.82B parameters. It’s not really that big, but given we’re going to be CPU-bound, we’ll have to make some tradeoffs (let’s just say I won’t be running llama3.1:405b anytime soon 😅).

With everything set up, I can hit Ollama with a request:


Note: We set stream: false in the JSON payload so that we get the whole completion back as a single response, rather than as a stream of partial responses (Ollama streams by default). This matters because we need the complete message before we can do anything with it, so a streamed response isn’t useful here.
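The original request isn’t reproduced here. As a rough sketch, an equivalent non-streaming call using only Python’s standard library might look like this. It assumes Ollama is listening on its default port (11434) on localhost and that the model tag is phi3:mini; adjust both for your own host.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "phi3:mini") -> urllib.request.Request:
    """Build a POST to Ollama's /api/generate endpoint with streaming disabled."""
    payload = {
        "model": model,
        "prompt": prompt,
        # Without this, Ollama streams back a series of partial JSON chunks.
        "stream": False,
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, timeout: float = 300) -> dict:
    """Send the request and decode the single JSON reply (needs a running Ollama)."""
    with urllib.request.urlopen(build_generate_request(prompt), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

The generous timeout is deliberate: on a CPU-only box, a single completion can take a minute or more.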

And get back a response (this one took just over a minute to generate):

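With stream set to false, the reply is a single JSON object. The generated text is in its response field, and timing fields such as total_duration are reported in nanoseconds. Here is a small, hypothetical helper for pulling those two pieces out, which is handy for seeing how long generation takes on CPU-only hardware:

```python
def summarise_response(body: dict) -> tuple[str, float]:
    """Return the generated text and the total generation time in seconds."""
    text = body["response"].strip()
    # Ollama reports durations in nanoseconds.
    seconds = body.get("total_duration", 0) / 1_000_000_000
    return text, seconds
```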

Great, now let’s plug this into Home Assistant.

Home Assistant Integration

Home Assistant has an Ollama integration, but it’s not quite what I want. That integration is for plugging Ollama (as with other GenAI services) into Home Assistant as an Assistant, so you can ask it questions and get responses. I want to use it for notifications: generate a message and send it to the kids (or, really, as any broadcast notification). That means I’m going to use a RESTful Command to call the Ollama API. Here’s the configuration for that:

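One thing to watch when templating a prompt into a JSON payload is escaping. Prompts like the one in the example run below contain newlines and quotes, and these produce invalid JSON if spliced in as raw text. In a Home Assistant template, the to_json filter is one way to handle the escaping. The same idea is shown in Python below; the function names are illustrative only.

```python
import json


def naive_payload(prompt: str) -> str:
    # Splices the raw prompt into a JSON string literal: breaks on quotes and newlines.
    return '{"model": "phi3:mini", "prompt": "' + prompt + '", "stream": false}'


def safe_payload(prompt: str) -> str:
    # json.dumps escapes quotes, backslashes and newlines for us.
    return json.dumps({"model": "phi3:mini", "prompt": prompt, "stream": False})
```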

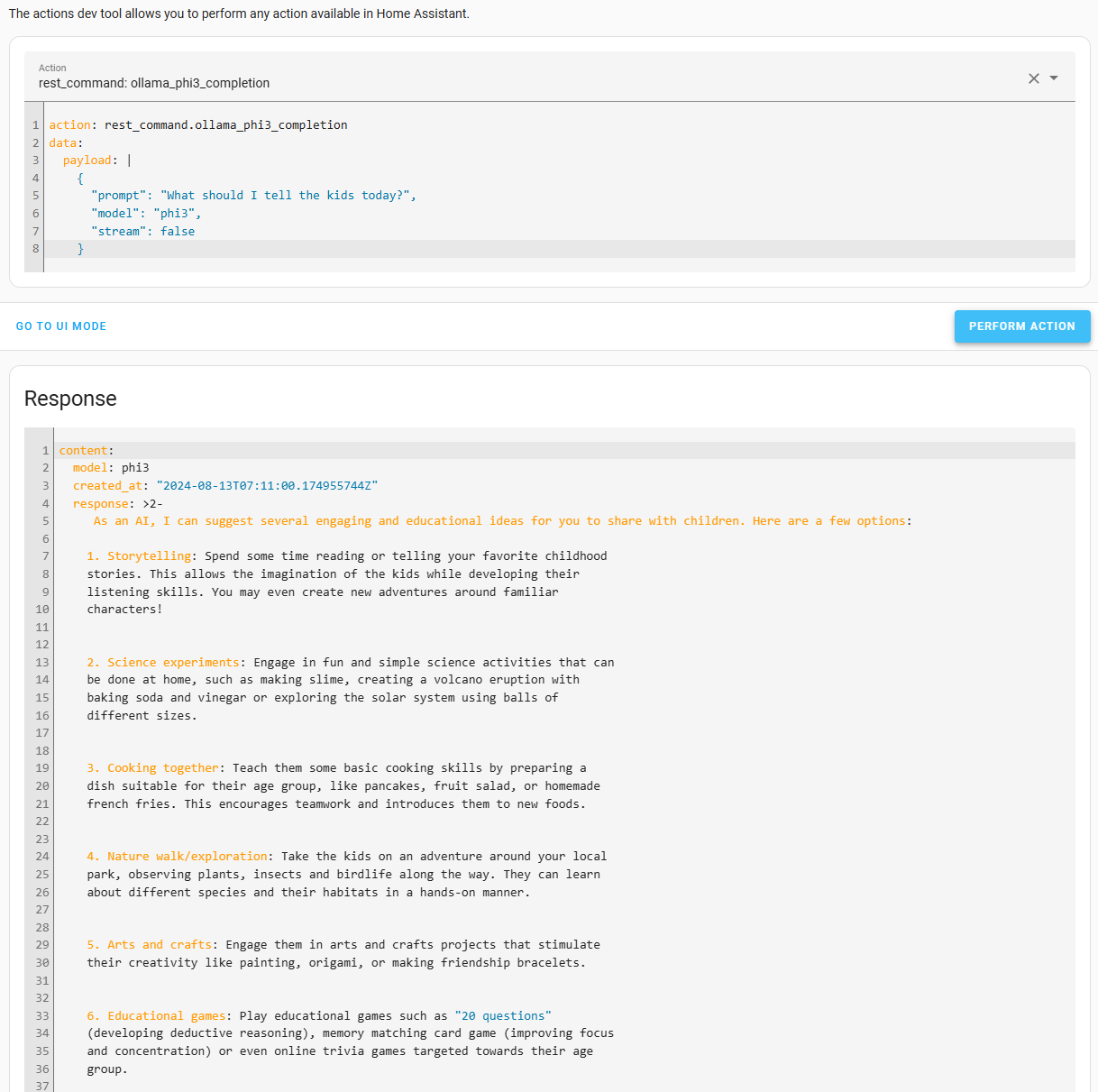

Reload the RESTful Command config and there will be a new action in Home Assistant, rest_command.ollama_phi3_completion, which we can call from anywhere that allows us to call an action. To test it out, navigate to the Developer Tools -> Actions page in Home Assistant, select rest_command.ollama_phi3_completion from the dropdown, and then enter the following as the action data:


And we can see a result:

Awesome, time to plug it into an automation.

Automation

I already have an automation that generates the message, which we then broadcast on the speakers around the house using a TTS (text-to-speech) service. I’m going to modify this automation to call the Ollama RESTful Command before calling the TTS service. But there’s a catch: the Ollama API can take a while to respond (in my testing, a few minutes with a “real” payload size). So, to keep the 8.30am notification on time, I’m going to make the Ollama API call at 5.30am and “cache” the response until it’s needed.

Unfortunately, while Home Assistant has an input_text helper we could use for that, it has a maximum length of 255 characters, which isn’t enough for the response we get back from Ollama. So instead, we’ll just have the automation wait around until 8.30am.
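The two constraints above can be expressed as a couple of checks. Below is a hedged sketch with hypothetical helper names: one checks whether a message would fit in an input_text helper, and one works out how long the automation has to wait for 8.30am once generation finishes.

```python
from datetime import datetime, time
from typing import Optional

# Home Assistant caps input_text helpers at 255 characters.
INPUT_TEXT_MAX = 255


def fits_in_input_text(message: str) -> bool:
    """True if the message could be cached in an input_text helper."""
    return len(message) <= INPUT_TEXT_MAX


def seconds_until(now: datetime, target: time = time(8, 30)) -> Optional[float]:
    """Seconds from `now` until `target` on the same day, or None if already passed.

    Uses naive local times. None flags the case where a wait for an 08:30
    time trigger would not fire again until the following morning.
    """
    target_dt = datetime.combine(now.date(), target)
    if now >= target_dt:
        return None
    return (target_dt - now).total_seconds()
```

The None case matters if generation ever runs past 8.30am. As I understand it, a wait for an 08:30 time trigger would then sit until the next day, so giving wait_for_trigger a timeout may be worth considering.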

Here’s the automation:


I’ve removed the actual payload from the automation, but you can see that we call the Ollama API at 5.30am, store the result in a response variable, then use the wait_for_trigger action to wait until 8.30am before broadcasting the message. If the Ollama API call fails, we just skip that child’s announcement. We also wait for the TTS service to finish before moving on to the next child’s announcement.
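The per-child control flow described above can be modelled outside Home Assistant. In this sketch, generate is a hypothetical stand-in for the rest_command call. Its result is assumed to look like a rest_command response variable, with an HTTP status and the parsed JSON body under content. Children whose call failed are skipped, and the rest are returned in order so they can be played one after another.

```python
from typing import Callable, Iterable


def build_announcements(
    children: Iterable[str],
    generate: Callable[[str], dict],
) -> list[tuple[str, str]]:
    """Generate one message per child, skipping any child whose call failed.

    `generate` is assumed to return {"status": <int>, "content": {"response": "..."}}.
    """
    announcements = []
    for child in children:
        result = generate(child)
        if result.get("status") != 200:
            continue  # Mirror the automation: no announcement for this child today.
        announcements.append((child, result["content"]["response"].strip()))
    return announcements
```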

Example Run

Today, one of my kids didn’t have any “special” activities at school, so the message that was sent to the SLM was:

Create a friendly response that will announced over our house speakers in the morning to inform a child of their activities that they have today.

Keep it short, it should be announced in the space of about 1 minute.

The child's name is '<child>', and the following is a description of what their day involves.

## Description

There are no special activities at school today.

And the response back from Ollama was:

Good morning <child>! It's time to get ready for another exciting day ahead. School starts just like any other day with learning, playing, and making new memories. Remember, even without special activities, every day brings opportunities to discover something amazing. Have a fantastic day at school, buddy!
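The prompt in this example is a fixed template with the child’s name and a description filled in. Below is a minimal Python sketch of assembling it. The template wording follows the prompt shown (with “will be announced” corrected). The post only shows the no-activities case, so the assumption that activities arrive as a list of strings, rendered as bullets, is mine.

```python
PROMPT_TEMPLATE = (
    "Create a friendly response that will be announced over our house speakers "
    "in the morning to inform a child of their activities that they have today.\n\n"
    "Keep it short, it should be announced in the space of about 1 minute.\n\n"
    "The child's name is '{child}', and the following is a description of what "
    "their day involves.\n\n"
    "## Description\n\n"
    "{description}"
)


def build_prompt(child: str, activities: list[str]) -> str:
    """Fill the template, falling back to a 'nothing special' description."""
    if activities:
        description = "\n".join(f"- {activity}" for activity in activities)
    else:
        description = "There are no special activities at school today."
    return PROMPT_TEMPLATE.format(child=child, description=description)
```

For example, build_prompt("Sam", ["Library day", "Sport"]) ends the prompt with a two-item bulleted list.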

Conclusion

This was a fun little project to work on, and it’s a great example of how you can use GenAI in your smart home to make things a little more interesting.

I’ve had this running for a few days now (before switching the TTS over to it and retiring the original, I ran it in the background for a while to test the generation). The first time it ran, I heard my kids call out “Dad, the house said something different today!”, which is either a good sign, or a sign that Pavlov’s bell has been replaced with a new one 🤣.

There are still a few tweaks I need to make to the context around the message. For example, it will sometimes return emojis, which the TTS service then reads out (we had “sparkle smiley face” the other day), but that’s all part of the fun of working with GenAI.
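Alongside telling the model not to use emojis, another option is to sanitise the text before it reaches TTS. The sketch below is hypothetical and not what the post does. It drops characters in Unicode’s “Symbol, other” category, which covers most emoji, along with the joiners and skin-tone modifiers that combine them. It then tidies up the leftover whitespace.

```python
import unicodedata

# Zero-width joiner, variation selector-16 and skin-tone modifiers (U+1F3FB..U+1F3FF).
_EMOJI_JOINERS = {"\u200d", "\ufe0f"} | {chr(cp) for cp in range(0x1F3FB, 0x1F400)}


def strip_emoji(text: str) -> str:
    """Remove emoji-like symbols so TTS doesn't read them aloud."""
    kept = [
        ch for ch in text
        if unicodedata.category(ch) != "So" and ch not in _EMOJI_JOINERS
    ]
    # Collapse the double spaces left behind where emoji were removed.
    return " ".join("".join(kept).split())
```

One caveat: “Symbol, other” also includes characters like the degree sign, so this is too blunt for messages that mention temperatures.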

It was also funny when we had school sport. We use an acronym to refer to it, and since the model doesn’t know that acronym, it made up a meaning for it with… less than helpful results!
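One way to stop the model guessing is to expand family- or school-specific acronyms before building the prompt. In this sketch the glossary entry is a made-up placeholder, since the real acronym isn’t named here. Matching on word boundaries stops it from rewriting longer words that merely contain the acronym.

```python
import re

# Hypothetical glossary: "XYZ" is a placeholder, not the acronym our school uses.
GLOSSARY = {"XYZ": "school sport"}


def expand_acronyms(text: str, glossary: dict[str, str] = GLOSSARY) -> str:
    """Replace whole-word acronyms with their meaning before prompting the model."""
    for acronym, meaning in glossary.items():
        pattern = rf"\b{re.escape(acronym)}\b"
        # A function replacement stops re.sub from interpreting backslashes in `meaning`.
        text = re.sub(pattern, lambda _match: meaning, text)
    return text
```

An alternative is to include the glossary in the prompt itself as extra context, at the cost of a longer prompt for an already slow model.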

My next plan is to plug this into the automations that announce when our various appliances have finished their cycles (see Part 3 of this series for how I did that), so that we get something better than “The washing machine has finished” 🤣.